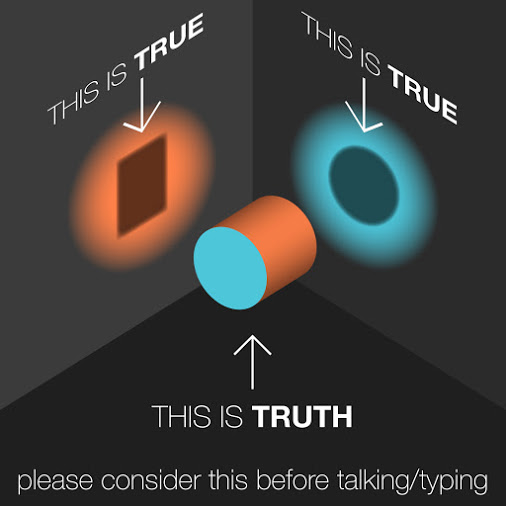

A work of art (specifically, literature, including poetry such as song lyrics) does not necessarily reveal the life or personality of the artist. Agatha Christie and Dorothy Sayers didn't make a habit of committing murders. Stephen King has probably never met a vampire or an extradimensional shapeshifter, and although he incorporated his near-fatal traffic accident into the Dark Tower series, I doubt he actually encountered his gunslinger Roland in person. Robert Bloch, reputed to have said he had the heart of a small boy -- in a jar on his desk -- was one of the nicest people I ever met. As Mercedes Lackey has commented on Quora, she doesn't keep a herd of magical white horses in her yard. Despite the preface to THE SCREWTAPE LETTERS, it seems very unlikely that C. S. Lewis actually intercepted a bundle of correspondence between two demons. And, as a vampire specialist, I could go on at length (but I won't) about the literary-critical tendency to analyze DRACULA as a source for secrets about Bram Stoker's alleged psychological hangups.

C. S. Lewis labeled the practice of trying to discover a writer's background, character, or beliefs from his or her work "the personal heresy." Elsewhere, writing about Milton, he cautioned against thinking we can find out how Milton "really" felt about his blindness by reading PARADISE LOST or any of his other poetry.

The Personal Heresy

An article by hip-hop musician Kevin Liles cautions against analyzing songs in this way and condemning singers based on the contents of their music, with lyrics "being presented as literal confessions in courtrooms across America":

Art Is Not Evidence

Some musicians and other artists have been convicted of crimes on the basis of words or images in their works. Liles urges passage of a law to protect creators' First Amendment rights in this regard, with narrowly defined "common-sense" exceptions to be applied if there's concrete evidence of a direct, factual connection between a particular work and a specific criminal act.

This kind of confusion between art and life is why I'm deeply suspicious of child pornography laws that would criminalize the broad category of "depicting" children in sexual situations. A description or drawing/painting of an imaginary child in such a situation, however revolting it may be, does no direct real-world harm. Interpreted loosely or capriciously, that kind of law could be read to ban a novel such as LOLITA. Would you really trust a fanatical book-banner or over-zealous prosecutor or judge to discern that the repulsive first-person narrator is thoroughly unreliable and that his self-serving claims about his abusive relationship with a preteen girl are MEANT to be disbelieved?

Many moons ago, in the pre-internet era, a friend of mine who wasn't a regular consumer of speculative fiction read my chapbook of horror-themed verse, DAYMARES FROM THE CRYPT. To my surprise, she expressed sincere worry about me for having such images in my head. Not being a habitual reader of the genre, she didn't recognize that most of the material in the poems consisted of very conventional, widely known horror tropes. Even the more personal pieces had been filtered through the "lens" of creativity (as Liles puts it in his essay) to transmute the raw material into artifacts, not autobiography.

In case you'd like to check out these supposedly disturbing verse effusions, DAYMARES FROM THE CRYPT -- updated with a few later poems -- is available in a Kindle edition for only 99 cents, with a cool cover by Karen Wiesner:

Daymares

Margaret L. Carter

Please explore love among the monsters at Carter's Crypt.