Can a computer program read thoughts? An experimental project uses AI as a "brain decoder," in combination with brain scans, to "transcribe 'the gist' of what people are thinking, in what was described as a step toward mind reading":

Scientists Use Brain Scans to "Decode" Thoughts

The example in the article discusses how the program interprets what a person thinks while listening to spoken sentences. Although the system doesn't translate the subject's thoughts into the exact same words, it's capable of accurately rendering the "gist" into coherent language. Moreover, it can even accomplish the same thing when the subject simply thinks about a story or watches a silent movie. Therefore, the program is "decoding something that is deeper than language, then converting it into language." Unlike earlier types of brain-computer interfaces, this noninvasive system doesn't require implanting anything in the person's brain.

However, the decoder isn't perfect yet; it has trouble with personal pronouns, for instance. Moreover, it's possible for the subject to "sabotage" the process with mental tricks. Participating scientists reassure people concerned about "mental privacy" that the system works only after it has been trained on the particular person's brain activity through many hours in an MRI scanner. Nevertheless, David Rodriguez-Arias Vailhen, a bioethics professor at Spain's Granada University, expresses apprehension that the more highly developed versions of such programs might lead to "a future in which machines are 'able to read minds and transcribe thought'. . . warning this could possibly take place against people's will, such as when they are sleeping."

Here's another article about this project, explaining that the program relies on a predictive model similar to ChatGPT. As far as I can tell, the system works only with thoughts mentally expressed in words, not pure images:

Brain Activity Decoder Can Read Stories in People's Minds

Researchers at the University of Texas at Austin suggest as one positive application that the system "might help people who are mentally conscious yet unable to physically speak, such as those debilitated by strokes, to communicate intelligibly again."

An article on the Wired site explores in depth the nature of thought and its connection with language from the perspective of cognitive science.

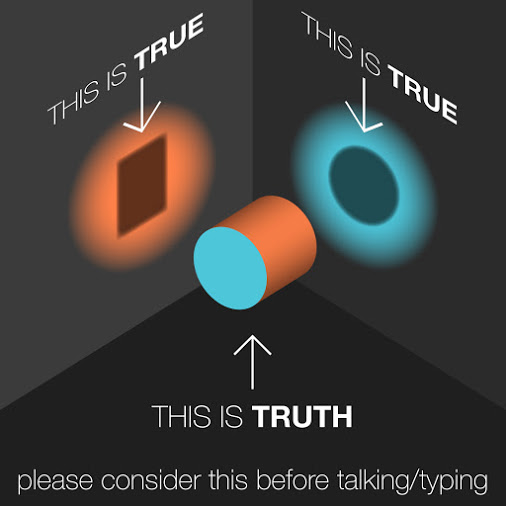

Decoders Won't Just Read Your Mind -- They'll Change It

Suppose the mind isn't, as traditionally assumed, "a self-contained, self-sufficient, private entity"? If not, is there a realistic risk that "these machines will have the power to characterize and fix a thought’s limits and bounds through the very act of decoding and expressing that thought"?

How credible is the danger foreshadowed in this essay? If AI eventually gains the power to decode anyone's thoughts, not just those of individuals whose brain scans the system has been trained on, will literal mind-reading come into existence? Could a future Big Brother society watch citizens not just through two-way TV monitors but by inspecting the contents of their brains?

Margaret L. Carter

Please explore love among the monsters at Carter's Crypt.