Kameron Hurley's latest LOCUS column focuses on how to evaluate feedback from editors:

When Should You Compromise?

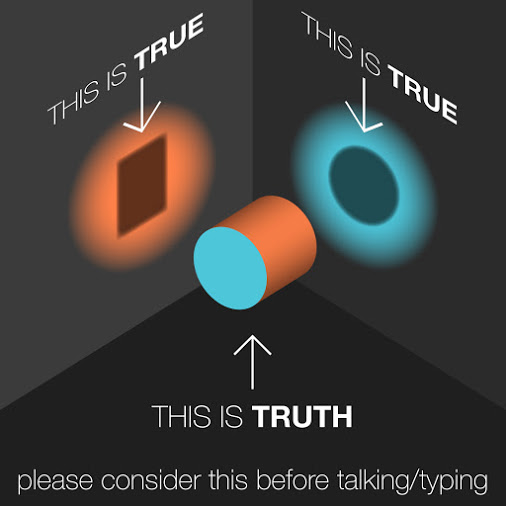

Her guiding principles are "Understand the story you are trying to tell" and "Be confident in the story you're telling." In the revision process, keep the theme, the emotional core in mind; "figure out what your story is about, and cut out anything that isn’t that – and add only bits that are in support of that story." The way she describes her process, she seldom argues with editors to justify her choices. She accepts the suggestions that take the story in the direction she wants it to go and disregards the rest. If "you don't know what the book is," she cautions, you may find yourself trying to revise in accordance with every criticism you get, even those that contradict each other, and end up in a "tailspin."

She also says she typically has to "write a significant number of words" to figure out what the story is really about. That statement slightly boggles me. Shouldn't that figuring-out happen in the outlining phase? Granted, however, many authors consider outlines constraining and need the exploratory process of actual writing in order to accomplish what "plotters" usually do in prewriting.

I've hardly ever had to grapple with the kind of overarching plot and character edits she discusses. Maybe any of my fiction that had serious problems on that level has been rejected outright, or maybe I've been fortunate enough to work through any such problems at the pre-submission stage with the help of critique partners. Most often, my disagreements with editorial recommendations have concerned details of sentence structure, word choice, and punctuation. When the latter kinds of "corrections" arise, house style usually rules, no matter how I feel about it. I consider the "Oxford comma" indispensable, but one of my former e-publishers didn't allow it except in rare cases. Worse yet, they didn't want commas between independent clauses. I gritted my teeth and allowed stories to go out into the world punctuated "wrong" by my standards. On other stylistic issues, I sometimes agree with the editor and sometimes not. If the disagreement isn't vital to me, I usually let it go to save "fights" for instances where the change makes a real difference.

Most editors, if not all, have personal quirks and fetishes. I had one who insisted "sit down" and "stand up" were redundant and wanted the adverbs omitted. Really? Do most people invite a guest to take a seat with the single word "Sit" as if speaking to a dog? I gave in except when a word indicating motion was definitely needed. Another declared that "to start to do a thing is to do it," so one should never state that a character is starting to do something. Then how does one describe an interrupted action without unnecessary wordiness? The small-press editor who published my first novel told me up front that he didn't permit reversing subject and verb in dialogue tags; if I wrote "said Jenny" instead of "Jenny said," he would automatically change it, no argument allowed. That house rule didn't bother me, although I never found out what he had against the reversal; maybe he thought it sounded too old-fashioned.

That book, a werewolf novel, was the only fiction project on which I've faced big-picture editing such as Hurley discusses. The editor warned me that the manuscript would face a merciless revision critique, which indeed it did. The pages came back to me covered in emphatic handwritten notes. I balked at only a few of his revision suggestions and went along with the vast majority. The two I remember clearly: I refused to write out the heroine's stepfather, because I felt the story needed her little sister, who couldn't exist otherwise. I kept more of the viewpoint scenes from the heroine's long-lost father, the antagonist, than the editor wanted me to delete, and later I wished I'd retained still more. (I re-inserted a little of that material when a later publisher reissued the book.) The result slashed the original text by almost half. The editor wrote back in obvious shock that he hadn't really expected me to make ALL those changes. Huh? How was I to know that, with (as I felt) my first chance for a professionally published book-length piece of fiction at stake? The acerbic tone of his corrections made no distinctions to indicate which changes were more important than any others.

Although I was generally pleased with the final result, I suspect the situation was, as Hurley puts it, a case where the editor "was reading (or wants to read) an entirely different book." The publisher was a horror specialty small press, and what I was really trying to write, most likely, was urban fantasy, although the term hadn't yet become widely known at that time. The editor remarked that the protagonist was the least scary werewolf he'd ever seen. Well, I didn't mean for her to be scary, except to herself. Her father, a homicidal werewolf, was intended as the source of terror. I saw the protagonist as a sympathetic character struggling with an incredible, harrowing self-transformation. The editor also didn't seem to care much for the romance subplot, which I kept intact, not wanting the heroine to appear to exist in a vacuum and already having trimmed a couple of workplace scenes at his request. In fact, I wanted to write something along the lines of Anthony Boucher's classic novelette "The Compleat Werewolf," a contemporary fantasy with suspense and touches of humor, which of course (as I recognize now) didn't fit comfortably into the genre conventions of horror. Anyway, the publisher produced a nice-looking trade paperback with a fabulous cover, and I remain forever grateful for their giving me my first "break," not to mention getting me my one and only review in LOCUS!

In any case, Kameron Hurley's closing remark deserves to be taken to heart by any author dealing with either critique partners or professional editors: "The clearer you are about the destination you want to arrive at, the easier it is to sift through all the different directions and suggestions you get from people along the way."

Margaret L. Carter

Carter's Crypt