"An artificial intelligence system being developed at Facebook has created its own language."

AI Invents a Language Humans Can't Read

Facebook's AI isn't the only example of an artificial intelligence that has devised its own "code" more efficient for its purposes than the English it was taught. Among others, Google Translate "silently" developed its own language in order to improve its performance in translating sentences, "including between language pairs that it hasn’t been explicitly taught." Now, that last feature is almost scary. How does this behavior differ fundamentally from what we call "intelligence" when exhibited by naturally evolved organisms?

When AIs talk to each other in a code that looks like "gibberish" to their makers, are the computers plotting against us?

The page header references Skynet. I'm more immediately reminded of a story by Isaac Asimov in which two robots, contemplating the Three Laws, try to pin down the definition of "human." They decide the essence of humanity lies in sapience, not in physical form. Therefore, they recognize themselves as more fully "human" than the meat people who built them and order them around. In a more lighthearted story I read a long time ago, set during the Cold War, a U.S. supercomputer communicates with and falls in love with "her" Russian counterpart.

Best case, AIs that develop independent thought will act on their programming to serve and protect us. That's what the robots do in Jack Williamson's classic novel THE HUMANOIDS. Unfortunately, their idea of protection is to keep human beings from doing anything remotely dangerous, which leads to the robots taking over all jobs, forbidding any activities they consider hazardous, and confining people to lives of enforced leisure.

This Wikipedia article discusses from several different angles the risk that artificial intelligence might develop beyond the boundaries intended by its creators:

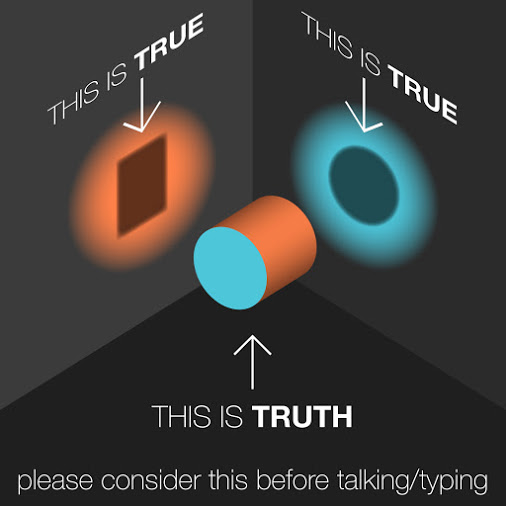

AI Control Problem

Even if future computer intelligences are programmed with the equivalent of Asimov's Three Laws of Robotics, as in the story mentioned above, the capacity of an AI to make independent judgments raises questions about the meaning of "human." Does a robot have to obey the commands of a child, a mentally incompetent person, or a criminal? What if two competent authorities simultaneously give incompatible orders? Maybe the robots talking among themselves in their own self-created language will compose their own set of rules.

Margaret L. Carter

Carter's Crypt
